Key Takeaways

- 90% of AI startups fail within the first 18 months due to fundamental product development mistakes, not technology limitations

- Most AI MVP failures stem from building AI-first instead of problem-first, resulting in solutions searching for problems

- User trust and reliability matter more than cutting-edge AI capabilities—inconsistent performance kills adoption faster than feature gaps

- Product-market fit validation must happen before scaling—65% of failed AI MVPs never validated willingness to pay

- UX complexity is the silent killer—users abandon AI products that require more cognitive effort than traditional solutions

- Competitive differentiation requires 10x better performance, not incremental improvements in crowded categories

- Regulatory readiness and ethical AI frameworks must be built from day one in healthcare, finance, and legal sectors

Introduction: The AI Gold Rush and Its Hidden Casualties

Why AI MVPs fail has become one of the most pressing questions in the startup ecosystem. Venture capital is pouring billions into AI startups, with global AI funding reaching $87.4 billion in 2025, yet the failure rate tells a sobering story that few discuss openly.

The numbers paint a stark picture. According to recent industry analysis, approximately 90% of AI startups fail within their first 18 months. Yet the headlines celebrate the unicorns while the quiet shutdowns, pivots, and acqui-hires happen behind closed doors, leaving founders, investors, and teams wondering what went wrong.

The reality? Most AI MVPs don’t fail because of inadequate technology or insufficient funding. They fail because founders skip fundamental product development principles in their rush to capitalize on AI hype. They build sophisticated models before validating basic user problems. They prioritize demo-day impressions over real-world utility. They confuse technical capability with market readiness.

This comprehensive analysis examines 12 high-profile AI MVP failures, extracting critical lessons from companies that collectively raised over $1 billion but still couldn’t achieve sustainable traction. More importantly, we’ll reveal the proven framework successful AI founders use to build products that customers actually need, trust, and pay for.

Whether you’re launching your first AI product or pivoting an existing solution, understanding why AI MVPs fail will save you months of wasted development time, hundreds of thousands in capital, and the emotional toll of building something nobody wants.

The Anatomy of AI MVP Failure: Understanding the Core Problem

The Fundamental Disconnect

Before examining specific failures, it’s essential to understand the systematic problem plaguing AI product development. Traditional software MVPs follow a proven path: identify a painful problem, build the minimum solution, validate with users, iterate based on feedback, and scale what works.

AI MVPs, however, often flip this model. Founders discover a fascinating AI capability (language models, computer vision, predictive analytics) and search for problems to apply it to. This backward approach—technology-first rather than problem-first—creates products that are technically impressive but commercially irrelevant.

The Five Pillars of AI MVP Success

Research across successful and failed AI products reveals five critical pillars that determine outcomes:

1. Problem Validation Depth

- Clear identification of specific user pain points

- Quantified cost of the problem (time, money, frustration)

- Evidence of existing workarounds or manual solutions

- Willingness to pay threshold established pre-development

2. Technical Reliability and Trust

- Consistent performance across edge cases and real-world scenarios

- Transparent AI decision-making with explainability features

- Graceful failure handling and user override capabilities

- Security, privacy, and compliance frameworks from day one

3. User Experience Excellence

- Intuitive onboarding that doesn’t require AI understanding

- Cognitive load reduction compared to existing solutions

- Clear value demonstration within the first 5 minutes of use

- Seamless integration into existing workflows and tools

4. Market Differentiation Strategy

- 10x better performance on specific use cases, not 10% improvement

- Defensible competitive moat beyond model selection

- Network effects or data advantages that compound over time

- Clear positioning that isn’t “X but with AI”

5. Business Model Viability

- Monetization validated before scaling investment

- Unit economics that support sustainable growth

- Customer acquisition cost aligned with lifetime value

- Multiple revenue stream opportunities identified
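
To make the unit-economics check concrete, here is a minimal Python sketch. It assumes a simple subscription model with constant monthly churn, so the expected customer lifetime is 1 / churn. The class name, field names, and sample figures are illustrative assumptions, not data from any company discussed here.

```python
from dataclasses import dataclass


@dataclass
class UnitEconomics:
    """Hypothetical per-customer figures for a subscription product."""

    monthly_revenue_per_customer: float  # average revenue per account per month
    gross_margin: float                  # share of revenue left after serving costs
    monthly_churn: float                 # share of customers lost each month
    acquisition_cost: float              # sales and marketing spend per new customer

    def lifetime_value(self) -> float:
        # Under constant churn, expected lifetime in months is 1 / churn.
        monthly_profit = self.monthly_revenue_per_customer * self.gross_margin
        return monthly_profit / self.monthly_churn

    def ltv_to_cac(self) -> float:
        return self.lifetime_value() / self.acquisition_cost

    def payback_months(self) -> float:
        # Months of gross profit needed to recover the acquisition cost.
        monthly_profit = self.monthly_revenue_per_customer * self.gross_margin
        return self.acquisition_cost / monthly_profit


# Made-up inputs for illustration only.
example = UnitEconomics(
    monthly_revenue_per_customer=100.0,
    gross_margin=0.6,
    monthly_churn=0.05,
    acquisition_cost=400.0,
)
print(f"LTV: {example.lifetime_value():.0f}")
print(f"LTV/CAC: {example.ltv_to_cac():.1f}")
print(f"Payback: {example.payback_months():.1f} months")
```

With these made-up inputs, lifetime value comes to about 1,200, the LTV/CAC ratio to about 3, and payback to a little under 7 months. A commonly cited rule of thumb treats a ratio of around 3 as healthy, but the right target depends on your market and margins.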

When AI MVPs fail, they typically collapse on multiple pillars simultaneously. Let’s examine real examples.

12 High-Profile AI MVP Failures: Deep Dive Analysis

1. Humane AI Pin: $230M Raised, <10,000 Units Sold

The Vision: Founded by former Apple design executives Imran Chaudhri and Bethany Bongiorno, Humane set out to replace smartphones entirely with an AI-powered wearable that projected information onto your hand and responded to voice and gesture commands.

The Reality: Despite backing from OpenAI CEO Sam Altman and raising over $230 million, the AI Pin suffered from fundamental execution failures. The device overheated during normal use, the battery barely lasted half a day, response times lagged noticeably, and the projected display was nearly impossible to read in bright light.

Critical Mistakes:

- Engineering feedback ignored: Multiple engineers warned leadership about hardware readiness issues months before launch, but concerns were dismissed to meet publicity deadlines

- Solution seeking problems: The AI Pin solved no specific pain point better than existing smartphones—it merely offered a different form factor

- UX fundamentals violated: Projecting onto skin proved impractical in real-world conditions, requiring users to contort their hands and find specific lighting

- Value proposition unclear: Reviewers and users couldn’t articulate why they’d use the Pin over their phones for any specific task

Market Outcome: Fewer than 10,000 units sold despite extensive marketing. HP acquired Humane at a significant discount just 10 months after launch and immediately discontinued the product.

Key Lesson: Vision and capital cannot compensate for skipping fundamental product validation. Even with exceptional pedigree and resources, building a solution before identifying a clear problem leads to spectacular failure.

2. Forward CarePods: $650M Healthcare Vision Meets Reality

The Vision: Forward’s autonomous “CarePods” promised to revolutionize healthcare access through AI-powered diagnostic kiosks. Patients would enter these pods, receive comprehensive health screenings via AI analysis, and get immediate results without human clinical staff.

The Reality: The CarePods experienced catastrophic technical failures including botched blood draws that injured patients, software crashes that locked patients inside pods, and diagnostic accuracy rates that fell far below acceptable medical standards. The company shut down all operations in late 2024.

Critical Mistakes:

- Complexity without reliability: Healthcare requires near-perfect execution—Forward delivered experimental technology that frequently malfunctioned in high-stakes scenarios

- Trust deficit: Users refused to trust AI-only diagnostics for serious health concerns, even with human oversight options

- Regulatory underestimation: The team didn’t adequately account for medical device regulations, liability concerns, and clinical validation requirements

- Product-market fit assumption: Forward raised hundreds of millions based on investor excitement rather than validated demand from actual patients or healthcare systems

Market Outcome: Complete shutdown after burning through $650+ million, with no acquisition or technology transfer. Patients who had prepaid for annual memberships lost their money.

Key Lesson: In regulated, high-stakes industries, fundraising success doesn’t equal product readiness. User trust, operational soundness, regulatory compliance, and demonstrated demand must precede scale—especially when lives are at stake.

3. Artifact: Instagram Founders’ News App Nobody Needed

The Vision: Created by Instagram co-founders Kevin Systrom and Mike Krieger, Artifact aimed to become the AI-powered news feed that understood your interests better than any human curator. With 160,000+ users on the waitlist and sleek design, expectations were astronomical.

The Reality: Artifact shut down in January 2024 after just over one year of operation despite strong initial interest. User engagement remained stubbornly low, with most users checking the app once and never returning.

Critical Mistakes:

- Behavioral inertia underestimated: Users already had news consumption habits through Twitter/X, Reddit, Apple News, and Google News—Artifact’s incremental improvement wasn’t compelling enough to change these patterns

- Differentiation failure: AI-powered curation sounded innovative but didn’t deliver meaningfully better results than algorithmic feeds users already trusted

- Value proposition weakness: The product couldn’t answer “Why would I use this instead of my current solution?” convincingly

- Habit formation failure: No compelling hook to make Artifact part of users’ daily routines—checking the app felt optional, not essential

Market Outcome: Voluntary shutdown after founders acknowledged they couldn’t achieve the scale needed to sustain operations. Yahoo acquired the underlying technology a few months later, but the standalone app did not survive.

Key Lesson: In crowded categories with established user behaviors, being marginally better isn’t enough. You must be 10x better on a specific dimension or solve a distinct pain point that existing solutions miss entirely. Incremental AI improvements don’t justify behavior change.

4. Vy by Vercept: Agentic AI That Couldn’t Keep Promises

The Vision: Vy positioned itself as your universal AI assistant—an agentic system that could autonomously book meetings, send emails, manage tasks across applications, and handle complex workflows with minimal human input. The company secured $16 million in funding led by top-tier VCs betting on the “AI agent” wave.

The Reality: When users actually tested Vy in their real workflows, the experience fell apart. Tasks failed midway without clear error messages. Browser extensions crashed silently. Integrations with Gmail, Notion, and Slack were brittle and unreliable. The AI made unpredictable decisions that broke user trust.

Critical Mistakes:

- Autonomy without reliability: Users delegated tasks expecting consistent results but got unpredictable outcomes that required constant supervision

- Transparency failure: When things went wrong, users couldn’t understand why or how to prevent future failures

- Scope too broad: Trying to be a universal assistant meant being mediocre at everything instead of excellent at specific workflows

- Trust recovery absent: After one or two failed tasks, users stopped trusting the system and abandoned it entirely

Market Outcome: Usage plummeted within weeks of launch as churn skyrocketed. The company pivoted multiple times before ultimately shutting down operations.

Key Lesson: Agentic AI requires bulletproof reliability. When users delegate authority to AI, they expect predictable outcomes, clear communication, and graceful failure handling. Break that trust once, and they won’t give you a second chance. Better to excel at one specific workflow than fail at many.

5. Niki.ai: Conversational Commerce Before Its Time

The Vision: Indian startup Niki.ai raised $2 million to build a chatbot that enabled users to book movie tickets, pay bills, order food, and shop through conversational AI on WhatsApp and other messaging platforms.

The Reality: Despite functional technology and initially positive reception, Niki shut down by 2021. The Indian market wasn’t ready for conversational commerce at scale, and Niki couldn’t overcome infrastructure and behavioral barriers.

Critical Mistakes:

- Infrastructure dependency: Unreliable internet in Tier 2 and Tier 3 cities made chatbot interactions frustrating rather than convenient

- Trust and security concerns: Indian consumers were uncomfortable making financial transactions through bots, preferring established app interfaces

- Regional language limitations: Inconsistent support for regional languages limited market reach in a linguistically diverse country

- Entrenched competition: Apps like Paytm and Flipkart already owned the user relationships and trusted payment flows

Market Outcome: Gradual user decline followed by complete shutdown. No acquisition or technology transfer.

Key Lesson: Timing and market readiness matter enormously. AI MVPs in emerging markets must deeply understand infrastructure limitations, cultural trust factors, and competitive dynamics. Novelty alone doesn’t overcome fundamental friction points in user experience or market infrastructure.

6. Peltarion: Enterprise AI Platform Outpaced by Open Source

The Vision: Peltarion launched to democratize deep learning for enterprises, offering a full-stack platform that let non-technical teams deploy machine learning without writing code. They counted NASA, Tesla, and major Scandinavian institutions among their clients.

The Reality: Despite technical sophistication and real enterprise traction, Peltarion never achieved sustainable growth. Open-source alternatives like TensorFlow, PyTorch, and Hugging Face grew rapidly in capability and adoption—often for free—while Peltarion’s paid platform struggled to justify its premium pricing.

Critical Mistakes:

- No defensible moat: The platform’s technical advantages eroded quickly as open-source tools improved and developer communities grew

- Complexity-accessibility tension: The platform was simultaneously too complex for true non-technical users and not flexible enough for sophisticated developers

- Enterprise sales friction: Long sales cycles and high customer acquisition costs without sticky engagement or network effects

- Talent vs. platform value: Acquirers wanted the team’s expertise, not the platform technology itself

Market Outcome: Acquired by King (maker of Candy Crush) in 2022 for talent and IP. The Peltarion platform was shut down within months, and the brand disappeared.

Key Lesson: Enterprise AI tools need more than great engineering—they need defensible competitive advantages that compound over time. Without network effects, proprietary data, or irreplaceable workflows, even well-executed platforms get commoditized by open-source alternatives and better-capitalized competitors.

7. CodeParrot: YC-Backed Dev Tool Lost to GitHub Copilot

The Vision: CodeParrot, a Y Combinator Winter 2023 startup, aimed to help developers code faster with an AI-powered assistant. With strong initial interest and YC backing, the team expected rapid adoption.

The Reality: CodeParrot entered a market already dominated by GitHub Copilot, with native GPT-4 integrations appearing in popular IDEs. The product couldn’t differentiate meaningfully, and users defaulted to trusted tools with deeper integrations.

Critical Mistakes:

- Late-market entry: By launch, GitHub Copilot had already established category dominance with better data, integrations, and user trust

- No unique value proposition: Suggestions weren’t significantly better, faster, or more contextually relevant than existing tools

- Integration depth lacking: Couldn’t match Copilot’s native IDE integrations or workflow seamlessness

- Retention failure: Users tried it once, saw marginal improvements, and reverted to familiar tools

Market Outcome: Shut down within two years of launch despite YC pedigree and initial enthusiasm. No acquisition or technology transfer.

Key Lesson: In developer tools, utility trumps novelty. Developers won’t switch tools for marginal improvements—you must 10x a core workflow or integrate so seamlessly that switching back feels painful. Late-market entries need clear, defensible differentiation, not just “we’re AI-powered too.”

8. IBM Watson for Oncology: Enterprise AI’s $62M Lesson

The Vision: IBM partnered with Memorial Sloan Kettering Cancer Center to build Watson for Oncology, an AI system that would recommend cancer treatment plans by analyzing medical literature and patient data. Major hospitals worldwide adopted it with high expectations.

The Reality: By 2018, multiple hospitals discontinued Watson for Oncology after discovering it recommended unsafe and incorrect treatments. The AI had been trained primarily on hypothetical cases rather than real patient outcomes, leading to recommendations that contradicted clinical evidence.

Critical Mistakes:

- Training data mismatch: System trained on synthetic cases rather than comprehensive real-world outcomes

- Validation failure: Insufficient clinical validation before deployment in life-or-death scenarios

- Transparency issues: Doctors couldn’t understand why Watson made specific recommendations, making it impossible to trust

- Overpromising capabilities: Marketing suggested Watson could match or exceed oncologist expertise—reality fell catastrophically short

Market Outcome: MD Anderson Cancer Center ended its Watson project after spending $62 million, and other healthcare institutions also terminated contracts. IBM eventually sold its Watson Health assets at a significant loss in 2022.

Key Lesson: Healthcare AI demands extraordinary validation standards. Training data must reflect real-world complexity, recommendations must be explainable to clinicians, and performance must be validated against actual patient outcomes—not synthetic benchmarks. Overpromising in healthcare doesn’t just fail commercially; it damages lives and erodes trust in AI broadly.

9. Quill by Narrative Science: AI Writing Before Product-Market Fit

The Vision: Quill by Narrative Science offered AI-generated business intelligence reports, transforming data into natural language narratives for enterprises. The company raised $53 million and attracted Fortune 500 clients.

The Reality: While technically capable, Quill struggled to demonstrate clear ROI. The generated reports required significant human editing, and clients questioned whether the AI added enough value to justify the cost compared to human analysts.

Critical Mistakes:

- Value proposition ambiguity: Clients couldn’t quantify clear time or cost savings versus traditional reporting

- Quality inconsistency: Generated narratives varied in quality and often missed nuanced insights human analysts would catch

- Integration complexity: Required significant setup and data pipeline work before delivering value

- Monetization challenges: Pricing model didn’t align with perceived value, making renewals difficult

Market Outcome: Acquired by Salesforce in 2021, but the Quill product was discontinued shortly after. Technology and team absorbed into Salesforce’s broader AI initiatives.

Key Lesson: AI products must demonstrate clear, quantifiable ROI that justifies their cost. “AI-generated” isn’t inherently valuable—it must save significant time, reduce costs, or improve outcomes measurably. When AI outputs require substantial human review, the value proposition collapses.

10. Blippar: $150M AR/AI Vision That Couldn’t Monetize

The Vision: Blippar combined augmented reality and AI to let users scan objects and get instant information. The company raised over $150 million and partnered with major brands like Coca-Cola and General Mills for AR marketing campaigns.

The Reality: Despite impressive technology and high-profile partnerships, Blippar couldn’t find a sustainable business model. B2B marketing campaigns were one-off projects without recurring revenue. B2C adoption never reached critical mass because users had no reason to habitually scan products.

Critical Mistakes:

- Business model mismatch: The technology was impressive, but the use cases didn’t generate recurring revenue

- Behavior change failure: Users wouldn’t habitually scan products without clear, consistent value

- Platform dependency: Relied on brands paying for AR campaigns, but brands viewed it as experimental marketing, not core strategy

- Competitor emergence: Google Lens and other tech giants entered the visual search space with free alternatives

Market Outcome: Filed for administration (UK bankruptcy) in 2018 after burning through $150+ million. Assets were acquired at a fraction of the previous valuation.

Key Lesson: Impressive technology needs a sustainable business model. One-off projects and experimental marketing budgets don’t build sustainable companies. AI/AR products must create enough consistent value that users or businesses build them into regular workflows—and are willing to pay predictably for that value.

11. Clarifai’s Consumer Product Failure: The Pivot to Enterprise

The Vision: Clarifai started as a consumer-facing visual recognition app after taking top places in the 2013 ImageNet classification challenge. Users could upload images and get AI-powered descriptions and tags. The company raised significant venture funding.

The Reality: Despite initial consumer enthusiasm, Clarifai couldn’t monetize its consumer product. User engagement dropped as the novelty wore off—people didn’t have consistent use cases for image recognition. The company ultimately pivoted entirely to enterprise B2B solutions.

Critical Mistakes:

- No recurring use case: Consumer users tried the app out of curiosity but had no reason to return regularly

- Monetization unclear: No obvious path to charge consumers for occasional image recognition

- Value demonstration failure: Couldn’t articulate clear problems being solved in users’ daily lives

- Competition from platforms: Google Photos and Apple Photos integrated similar capabilities for free

Market Outcome: Successful pivot to enterprise B2B, but the consumer product was completely abandoned. The company found sustainable business serving developers and enterprises, not consumers.

Key Lesson: Novelty creates initial engagement but doesn’t sustain products. AI MVPs need clear, recurring use cases that solve ongoing problems. When you can’t identify why users would return tomorrow, next week, and next month, you don’t have a product—you have a demo.

12. Meta’s Galactica: AI Research Model Shut Down in 3 Days

The Vision: Meta released Galactica, a large language model trained specifically on scientific literature, designed to help researchers write papers, solve equations, and access scientific knowledge. The company positioned it as a revolutionary research tool.

The Reality: Within 72 hours of public release, Meta shut down Galactica’s demo after severe backlash. The model confidently generated false scientific “facts,” made up citations, and produced biased outputs—all presented with authoritative certainty that made it dangerous for actual research use.

Critical Mistakes:

- Insufficient safety testing: Released publicly without adequate red-team testing for misinformation and bias

- Overconfident outputs: Model presented false information with the same certainty as accurate facts, with no uncertainty quantification

- Use case mismatch: Scientific research demands high accuracy and transparency—Galactica delivered neither consistently

- Reputation damage: Meta’s credibility in AI research suffered from the hasty release and retreat

Market Outcome: Demo shut down within 3 days. Project abandoned entirely with significant reputation damage to Meta’s AI research division.

Key Lesson: AI products in high-stakes domains require extraordinary safety standards and transparency. Models that confidently generate false information are worse than useless—they’re dangerous. Rushing to market without adequate testing destroys trust and credibility that takes years to rebuild.

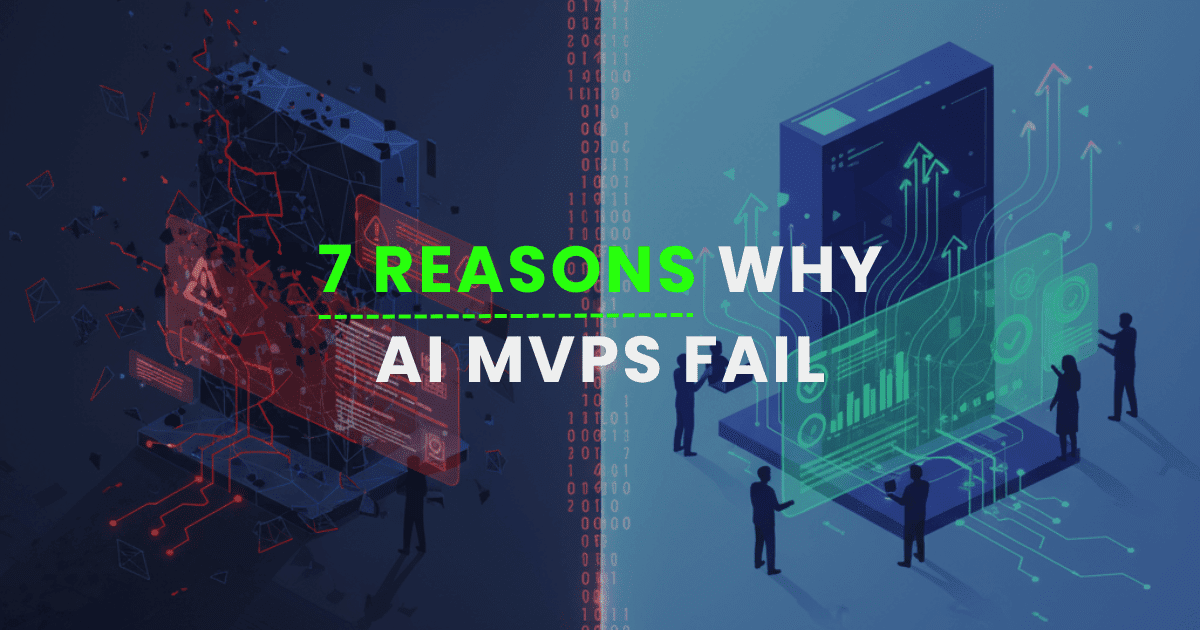

Why AI MVPs Fail: The 7 Systematic Root Causes

1. Building AI-First Instead of Problem-First

The Pattern: Founders discover a fascinating AI capability (GPT-4’s language generation, computer vision models, predictive analytics) and then search for problems to apply it to. This creates solutions searching for problems rather than solutions addressing validated needs.

Why It Fails:

- Users don’t care about your AI—they care about their problems being solved

- Technology-first thinking leads to features users don’t need or want

- Value proposition becomes “it uses AI” rather than “it saves you X hours/dollars”

- Competitive differentiation evaporates as AI capabilities become commoditized

Prevention Strategy:

- Spend 10x more time understanding user problems than exploring AI capabilities

- Validate that users experience significant pain with current solutions

- Confirm users are already spending time/money trying to solve the problem

- Build AI only after identifying specific problems it solves better than alternatives

2. Poor UX and Onboarding Complexity

The Pattern: AI MVPs often have impressive backend technology but terrible user experiences. Onboarding requires understanding AI concepts. Interfaces are cluttered with technical settings. Users can’t figure out what the product does or how to use it within the critical first 5 minutes.

Why It Fails:

- Users abandon products they can’t understand quickly

- Cognitive load higher than that of traditional solutions kills adoption

- Technical jargon alienates non-technical users (most of your market)

- Complex setup processes prevent momentum and word-of-mouth growth

Prevention Strategy:

- Design onboarding for non-technical users who’ve never heard of AI

- Make the product’s purpose obvious within the first minute and deliver clear value within the first 5 minutes

- Hide technical complexity behind intuitive interfaces

- Test with users who know nothing about your product or AI generally

3. Weak Differentiation in Crowded Markets

The Pattern: Founders build “X but with AI” products—existing categories with AI capabilities bolted on. These products offer marginal improvements (10-20% better) rather than transformational value. Users can’t articulate why they’d switch from established solutions.

Why It Fails:

- Behavior change requires 10x improvements, not incremental gains

- Switching costs (learning new tools, migrating data, changing workflows) exceed perceived benefits

- Incumbent products integrate AI themselves, erasing your advantage

- Marketing becomes feature comparison rather than compelling vision

Prevention Strategy:

- Identify specific use cases where you’re 10x better, not 10% better

- Build defensible moats (proprietary data, network effects, workflow lock-in)

- Target underserved niches where incumbents don’t compete

- Create new categories rather than competing in established ones

4. Inconsistent Results and Reliability Problems

The Pattern: AI MVPs work beautifully in demos but fail unpredictably in real-world usage. Models handle common cases well but crash on edge cases. Results vary based on input phrasing. Users can’t predict when the AI will succeed or fail.

Why It Fails:

- Users need consistent, predictable outcomes to build trust

- Unreliable tools get abandoned after the first few failures

- Unpredictable behavior creates anxiety rather than confidence

- Users can’t incorporate unreliable tools into important workflows

Prevention Strategy:

- Test extensively with real user data, not curated demo datasets

- Build transparency features showing AI confidence levels

- Provide user overrides and manual alternatives for failures

- Focus on narrow use cases where you can guarantee reliability
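
One way to surface the phrasing sensitivity described above is to group paraphrases of the same request and measure how often the system gives the same answer. The Python sketch below is a minimal illustration: `consistency_report`, `toy_model`, and the sample prompts are hypothetical names and data, and a real test set should come from actual user inputs rather than hand-written examples. It assumes every group contains at least one prompt.

```python
from collections import Counter
from typing import Callable, Dict, List


def consistency_report(
    model: Callable[[str], str],
    paraphrase_groups: Dict[str, List[str]],
) -> Dict[str, float]:
    """Per intent, return the share of paraphrases that yield the most common answer."""
    report = {}
    for intent, prompts in paraphrase_groups.items():
        answers = Counter(model(prompt) for prompt in prompts)
        most_common_count = answers.most_common(1)[0][1]
        report[intent] = most_common_count / len(prompts)
    return report


def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model: it only recognises two phrasings.
    if "refund" in prompt or "money back" in prompt:
        return "refund"
    return "unknown"


groups = {
    "refund_request": [
        "I want a refund",
        "Can I get my money back?",
        "Please reverse the charge",
    ],
}
report = consistency_report(toy_model, groups)
print(report)
```

A score of 1.0 means every paraphrase produced the same answer. Lower scores point to intents where users cannot predict what the system will do.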

5. Monetization and Business Model Gaps

The Pattern: Founders defer monetization decisions until “after we have traction.” They focus on user growth metrics while ignoring whether users would actually pay. When they finally introduce pricing, users balk or churn because the value doesn’t justify the cost.

Why It Fails:

- Free users and paying customers have fundamentally different behaviors

- Willingness to pay validates true product value—usage alone doesn’t

- Late-stage monetization often reveals product-market fit never existed

- Revenue projections collapse when free users won’t convert

Prevention Strategy:

- Test pricing from day one, even during beta

- Run pricing experiments with mock paywalls to gauge willingness to pay

- Identify which features users would miss most if removed

- Validate that users can quantify clear ROI (time saved, costs reduced, revenue increased)
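
Mock-paywall experiments usually involve small samples, so a raw conversion rate can mislead. As a rough sketch, the Python below puts a Wilson score interval around the observed rate. The function name and the sample numbers (12 upgrade clicks from 200 visitors) are assumptions for illustration only.

```python
import math
from typing import Tuple


def wilson_interval(conversions: int, visitors: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a conversion rate (z = 1.96 gives roughly 95%)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    center = (p + z**2 / (2 * visitors)) / denom
    half_width = (
        z * math.sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2)) / denom
    )
    return center - half_width, center + half_width


# Hypothetical result: 12 of 200 visitors clicked "upgrade" on a mock paywall.
low, high = wilson_interval(12, 200)
print(f"Observed: {12 / 200:.1%}, plausible range: {low:.1%} to {high:.1%}")
```

If even the low end of the range supports your revenue model, the signal is encouraging. If only the high end does, keep testing before you build.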

6. Ignoring User Trust and Transparency Needs

The Pattern: AI MVPs operate as “black boxes” where users can’t understand how decisions are made. When AI makes mistakes, users get no explanation. There’s no way to verify accuracy or override bad decisions. This opacity destroys trust, especially in high-stakes applications.

Why It Fails:

- Users won’t delegate important decisions to systems they can’t understand or control

- Single unexplained errors destroy trust that took weeks to build

- Lack of transparency creates liability and compliance risks

- Professional users (doctors, lawyers, financial advisors) need explainability to take responsibility for AI-assisted decisions

Prevention Strategy:

- Build explainability features from day one showing reasoning

- Provide confidence scores and alternative suggestions

- Allow users to override AI decisions and provide feedback

- Design for graceful failures with clear error messages and recovery paths
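
The override and feedback points above can be reflected directly in how a result is stored. The following Python sketch shows one possible data structure. All class and field names are hypothetical, and the confidence values are assumed to come from the model itself, so they may not be well calibrated.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Suggestion:
    label: str
    confidence: float  # model's own estimate in [0, 1]
    reason: str        # short, user-facing explanation


@dataclass
class Decision:
    suggestions: List[Suggestion]        # ranked, best first
    user_override: Optional[str] = None  # set when the user rejects the top suggestion
    feedback: List[str] = field(default_factory=list)

    @property
    def final_label(self) -> str:
        # The user's choice always wins over the model's.
        return self.user_override or self.suggestions[0].label

    def override(self, label: str, note: str = "") -> None:
        self.user_override = label
        if note:
            self.feedback.append(note)


# Hypothetical support-ticket example.
decision = Decision(
    suggestions=[
        Suggestion("technical", 0.62, "Message mentions an error code"),
        Suggestion("billing", 0.31, "Message mentions a charge"),
    ]
)
before = decision.final_label
decision.override("billing", "Customer is asking about an invoice")
print(before, "->", decision.final_label)
```

Keeping the alternatives and the user's note alongside the model output gives you both an explanation to show and a feedback record to learn from.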

7. Regulatory and Ethical Blindspots

The Pattern: Founders in healthcare, finance, legal, and other regulated industries underestimate compliance complexity. They build impressive technology that violates privacy regulations, lacks required certifications, or creates unacceptable liability risks.

Why It Fails:

- Regulated industries have gatekeepers who block non-compliant products

- Compliance retrofitting costs far more than building it in from the start

- Single regulatory violations can shut down companies entirely

- Ethical issues create PR crises that destroy credibility and funding

Prevention Strategy:

- Consult regulatory experts before building, not after

- Build privacy, security, and compliance frameworks from day one

- Conduct ethics reviews and bias audits throughout development

- Create audit trails and explainability features that support regulatory requirements
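
As an illustration of what an audit trail can look like in code, the Python sketch below chains each log entry to the previous one with a SHA-256 hash, so later edits to earlier entries become detectable. This is a simplified assumption about one possible design, not a compliance solution. Actual regulatory requirements vary by sector and jurisdiction, and the function names and payload fields are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    # Payload must be JSON-serializable; sorted keys keep the hash stable.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"payload": payload, "prev_hash": prev_hash, "hash": _digest(prev_hash, payload)}
    )


def verify(log: List[Dict[str, Any]]) -> bool:
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


log: List[Dict[str, Any]] = []
append_entry(log, {"model": "v1", "input_id": "a1", "action": "flagged"})
append_entry(log, {"model": "v1", "input_id": "a2", "action": "approved"})
ok_before = verify(log)

log[0]["payload"]["action"] = "approved"  # simulate tampering with history
ok_after = verify(log)
print(ok_before, ok_after)
```

In production you would also need durable storage, access controls, and retention policies, which this sketch leaves out.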

The AI MVP Success Framework: Building Products That Win

Step 1: Deep Problem Validation Before Any Development

Research Phase (2-4 Weeks):

User Interview Protocol:

- Conduct 20-30 in-depth interviews with target users

- Focus on current pain points and existing workflows

- Identify time/money spent on manual workarounds

- Discover emotional frustration levels and priority rankings

Key Questions to Validate:

- How are users currently solving this problem? (If they’re not, the problem isn’t painful enough)

- What’s the cost of the problem? (Time, money, opportunity, frustration)

- What alternatives have they tried? (Shows they’re actively seeking solutions)

- Would they pay for a solution? How much? (Validates monetization potential)

Evidence You Need:

- Users describe the problem in their own words consistently

- Multiple users experience the same pain point

- Users are already spending resources (time/money) trying to solve it

- Users can quantify the value of a solution

Red Flags to Watch:

- Users say it’s a “nice to have” rather than a critical need

- Problem only exists because of your prompting, not organic frustration

- Users haven’t tried any alternatives (suggests low priority)

- Wide variation in how users describe the problem (suggests no clear common pain)

Step 2: Design Your Minimum Viable Experience (MVE)

Concept: MVE vs. MVP

A traditional MVP focuses on the minimum set of features. An MVE focuses on the minimum experience that delivers complete value in one narrow but meaningful use case.

MVE Design Principles:

1. Single Use Case Mastery:

- Choose the most painful, frequent workflow

- Design the best possible experience for that one thing

- Resist temptation to add adjacent features

- Measure success: does the product do this one thing better than any alternative?

2. Value in First 5 Minutes:

- User understands what the product does (30 seconds)

- User completes first valuable action (3 minutes)

- User experiences clear benefit (5 minutes)

- No setup, configuration, or training required

3. Trust-First Design:

- Show AI confidence levels clearly

- Provide “why” explanations for AI decisions

- Allow user overrides and manual alternatives

- Design for graceful, understandable failures

MVE Prototyping Options:

No-Code Validation:

- Wizard of Oz testing (humans behind the scenes simulating AI)

- GPT-4 API behind a simple interface for rapid iteration

- Existing tools chained together with Zapier/Make

- Manual processes to validate workflow before automation

Benefits:

- Validate value proposition before expensive engineering

- Iterate on UX rapidly without technical debt

- Test pricing and monetization early

- Fail fast if users don’t find value

Step 3: Build for Reliability and Edge Cases

Engineering Priorities:

1. Consistency Over Novelty:

- Predictable, repeatable results users can trust

- Graceful degradation when AI confidence is low

- Human-in-the-loop options for uncertainty

- Clear error messages and recovery paths
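
One way to read "graceful degradation" and "human-in-the-loop" is as a routing rule on model confidence. The sketch below assumes the model reports a confidence between 0 and 1; the `Prediction` type, the `route` function, and both threshold values are hypothetical and would need tuning against your own validation data.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model-reported confidence in [0, 1] (assumed available)


# Hypothetical thresholds, not values from the article; tune on real data.
AUTO_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.60


def route(pred: Prediction) -> str:
    """Decide how to present a prediction based on its confidence."""
    if not 0.0 <= pred.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if pred.confidence >= AUTO_THRESHOLD:
        return "auto"          # show the AI result directly
    if pred.confidence >= REVIEW_THRESHOLD:
        return "human_review"  # show as a suggestion a person must confirm
    return "fallback"          # hide the AI result and offer the manual path


if __name__ == "__main__":
    for p in (Prediction("invoice", 0.95), Prediction("invoice", 0.70), Prediction("invoice", 0.20)):
        print(p.confidence, route(p))
```

Keeping the three outcomes explicit makes it easy to log how often each path is taken, which feeds directly into failure-rate monitoring.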

2. Edge Case Testing:

- Collect real user inputs from day one

- Test against adversarial and unusual inputs

- Monitor failure rates and patterns meticulously

- Prioritize robustness over feature expansion

3. Performance Benchmarking:

- Define acceptable accuracy thresholds before launch

- Compare against human baselines, not other AI

- Measure across diverse user demographics and contexts

- Track performance degradation over time

Quality Gates:

- 95%+ success rate on core use case before launch

- Sub-2-second response times for interactive experiences

- Zero critical failures (data loss, privacy breaches, dangerous recommendations)

- Clearly documented limitations communicated to users
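
The quality gates above can be encoded as an automated pre-launch check. This is a minimal sketch: the `LaunchMetrics` fields and the `failed_gates` function are illustrative names, and measuring response time at the 95th percentile is an assumption, since the gate only says "sub-2-second".

```python
from dataclasses import dataclass


@dataclass
class LaunchMetrics:
    core_success_rate: float     # fraction of core-use-case tasks handled correctly
    p95_latency_seconds: float   # assumption: latency measured at the 95th percentile
    critical_failures: int       # data loss, privacy breaches, dangerous recommendations
    limitations_documented: bool


def failed_gates(m: LaunchMetrics) -> list[str]:
    """Return the list of quality gates that are not yet met."""
    failures = []
    if m.core_success_rate < 0.95:
        failures.append("core success rate below 95%")
    if m.p95_latency_seconds >= 2.0:
        failures.append("response time not under 2 seconds")
    if m.critical_failures > 0:
        failures.append("critical failures present")
    if not m.limitations_documented:
        failures.append("limitations not documented")
    return failures


if __name__ == "__main__":
    candidate = LaunchMetrics(0.93, 1.4, 0, True)
    print(failed_gates(candidate) or "all gates passed")
```

Returning the failed gates rather than a single boolean tells the team what to fix before the next launch review.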

Step 4: Validate Monetization Early and Often

Pricing Validation Strategies:

Pre-Launch:

- Landing pages with pricing and “reserve” CTAs

- Waitlist segmentation by willingness to pay

- User interviews specifically about budget and ROI expectations

- Competitor pricing analysis and value positioning

Early Access:

- Charge from day one, even at beta pricing

- Offer tiered pricing to understand value perception

- Track feature usage to identify premium capabilities

- Conduct pricing surveys after users experience value

Key Metrics to Track:

- Conversion rate from free trial to paid

- Price sensitivity and optimal price points

- Feature adoption by pricing tier

- Customer acquisition cost vs. lifetime value

Business Model Validation:

- Users can quantify ROI (time saved × hourly rate, revenue increased, costs reduced)

- Payback period aligns with sales cycle expectations

- Unit economics support sustainable growth

- Multiple revenue opportunities identified (upsells, enterprise tiers, add-ons)
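
The ROI bullet above can be turned into a small calculation. The sketch below adds up the three value sources named there and estimates a customer-side payback period; the function names, the upfront-cost input, and the example numbers are all hypothetical.

```python
def monthly_roi_value(hours_saved_per_month: float, hourly_rate: float,
                      revenue_increase: float = 0.0,
                      cost_reduction: float = 0.0) -> float:
    """Monthly value to the customer: time saved x hourly rate, plus other gains."""
    return hours_saved_per_month * hourly_rate + revenue_increase + cost_reduction


def payback_months(upfront_cost: float, monthly_price: float,
                   monthly_value: float) -> float:
    """Months until net monthly benefit covers the customer's upfront cost.

    Returns infinity when the product costs at least as much as the value it delivers.
    """
    net_benefit = monthly_value - monthly_price
    if net_benefit <= 0:
        return float("inf")
    return upfront_cost / net_benefit


if __name__ == "__main__":
    # Hypothetical customer: 10 hours saved per month at $60/hour.
    value = monthly_roi_value(hours_saved_per_month=10, hourly_rate=60)
    print(value, payback_months(upfront_cost=1000, monthly_price=100, monthly_value=value))
```

If interviewees cannot supply the inputs to a calculation like this, that is itself a signal that the value is not yet quantifiable.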

Step 5: Scale Strategic Differentiation

Competitive Moat Building:

1. Proprietary Data Advantages:

- Collect user feedback to continuously improve models

- Build datasets that competitors can’t easily replicate

- Create network effects where data improves with usage

- Establish partnerships for exclusive data access

2. Workflow Integration Depth:

- Integrate so deeply into workflows that switching is painful

- Create data lock-in through accumulated value

- Build ecosystem partnerships and integrations

- Develop workflow dependencies that compound

3. Domain Expertise and Trust:

- Become the recognized category leader

- Build thought leadership through content and community

- Develop industry certifications and compliance

- Create case studies and proof points

4. Technical Innovation:

- Develop proprietary models or fine-tuning approaches

- Optimize for specific use cases competitors ignore

- Build hybrid AI-human workflows competitors can’t match

- Patent defensible technical innovations

Step 6: Continuous Validation and Iteration

Metrics That Matter:

Product-Market Fit Indicators:

- Retention cohorts: 40%+ weekly retention after 8 weeks signals strong fit

- Organic growth rate: Word-of-mouth and referrals indicate genuine value

- Net Promoter Score: A score of 50+ suggests users are evangelizing your product

- Feature request patterns: Convergent requests indicate clear user needs
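
Two of these indicators reduce to simple arithmetic. The sketch below computes week-N retention for a signup cohort and a Net Promoter Score using the standard definition (percentage of 9-10 scores minus percentage of 0-6 scores); the function names and the sample data are illustrative.

```python
def weekly_retention(cohort: set[str], active_in_week: set[str]) -> float:
    """Fraction of a signup cohort that was active in a given later week."""
    if not cohort:
        raise ValueError("cohort is empty")
    return len(cohort & active_in_week) / len(cohort)


def net_promoter_score(scores: list[int]) -> float:
    """NPS on a -100..100 scale from 0-10 survey responses."""
    if not scores:
        raise ValueError("no survey responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


if __name__ == "__main__":
    signups = {"a", "b", "c", "d", "e"}       # hypothetical user ids
    active_week_8 = {"a", "c", "z"}           # "z" joined later and is ignored
    print(weekly_retention(signups, active_week_8))
    print(net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10, 9, 10]))
```

Retention is computed only over the original cohort, so users who joined later do not inflate the number.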

Business Health Signals:

- Customer Acquisition Cost (CAC) < 1/3 Lifetime Value (LTV)

- Churn rate < 5% monthly for B2B, < 10% for B2C

- Expansion revenue: Existing customers buying more over time

- Time to value: Users experience benefit within first session
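
The first two signals can be checked mechanically. The sketch below uses a common simplified LTV formula (margin-adjusted monthly revenue divided by monthly churn), which is an assumption rather than something the thresholds above prescribe; `simple_ltv` and `health_check` are hypothetical names.

```python
def simple_ltv(monthly_revenue_per_customer: float, gross_margin: float,
               monthly_churn: float) -> float:
    """Simplified lifetime value: margin-adjusted revenue x expected lifetime (1 / churn)."""
    if not 0.0 < monthly_churn <= 1.0:
        raise ValueError("monthly_churn must be in (0, 1]")
    return monthly_revenue_per_customer * gross_margin / monthly_churn


def health_check(cac: float, ltv: float, monthly_churn: float,
                 segment: str) -> dict[str, bool]:
    """Compare CAC and churn against the thresholds listed above."""
    churn_limits = {"b2b": 0.05, "b2c": 0.10}
    if segment not in churn_limits:
        raise ValueError("segment must be 'b2b' or 'b2c'")
    return {
        "cac_under_one_third_ltv": cac < ltv / 3,
        "churn_within_limit": monthly_churn < churn_limits[segment],
    }


if __name__ == "__main__":
    # Hypothetical B2B product: $100/month, 80% margin, 4% monthly churn, $500 CAC.
    ltv = simple_ltv(100, 0.8, 0.04)
    print(ltv, health_check(cac=500, ltv=ltv, monthly_churn=0.04, segment="b2b"))
```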

AI Performance Tracking:

- Accuracy rates by use case and user segment

- Confidence calibration: Does confidence match actual accuracy?

- Failure pattern analysis: Which edge cases cause problems?

- User override frequency: High overrides signal low trust
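
Confidence calibration and override frequency are both easy to measure once predictions are logged. The sketch below uses expected calibration error (ECE), one common way to compare stated confidence with observed accuracy. The bin count and function names are illustrative choices, and it assumes each logged prediction carries a confidence in [0, 1] and a correct/incorrect label.

```python
def expected_calibration_error(confidences: list[float], correct: list[bool],
                               n_bins: int = 10) -> float:
    """Weighted average gap between mean confidence and accuracy per confidence bin."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("inputs must be non-empty and the same length")
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for items in bins:
        if not items:
            continue
        avg_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        ece += (len(items) / total) * abs(avg_conf - accuracy)
    return ece


def override_rate(overridden: int, total_suggestions: int) -> float:
    """Share of AI suggestions that users replaced with their own answer."""
    if total_suggestions <= 0:
        raise ValueError("total_suggestions must be positive")
    return overridden / total_suggestions


if __name__ == "__main__":
    # A model that claims 90% confidence but is right only half the time.
    print(expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5))
    print(override_rate(30, 200))
```

An ECE near zero means the displayed confidence can be taken at face value; a large value suggests the confidence indicator may be misleading users.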

Iteration Framework:

Weekly Reviews:

- User feedback triage and pattern identification

- Performance metric analysis and anomaly detection

- Competitive intelligence and market shifts

- Prioritization of quick wins and bug fixes

Monthly Deep Dives:

- Cohort analysis and retention investigations

- Feature adoption and engagement analysis

- Pricing optimization and monetization experiments

- Strategic roadmap validation against user needs

Quarterly Strategic Assessments:

- Product-market fit evaluation and pivot decisions

- Competitive positioning and differentiation review

- Business model validation and optimization

- Technology stack and architecture assessments

Industry-Specific AI MVP Considerations

Healthcare AI: Navigating Regulation and Trust

Unique Challenges:

- HIPAA compliance and patient data privacy requirements

- FDA medical device classification and approval processes

- Clinical validation standards and peer review expectations

- Malpractice liability and insurance considerations

Success Strategies:

- Partner with established healthcare institutions for validation

- Build audit trails and explainability from day one

- Engage regulatory consultants before development begins

- Design for clinician augmentation, not replacement

- Invest heavily in accuracy and safety testing

Case Study Success: Viz.ai successfully obtained FDA clearance by focusing narrowly on stroke detection, achieving 95%+ accuracy, and integrating seamlessly into existing clinical workflows. They validated with real-world clinical studies before scaling.

Financial Services AI: Balancing Innovation and Compliance

Unique Challenges:

- SEC, FINRA, and banking regulatory requirements

- Fair lending laws and algorithmic bias detection

- Audit trails and model explainability mandates

- Cybersecurity and fraud prevention standards

Success Strategies:

- Build compliance frameworks before features

- Conduct regular bias audits across demographic groups

- Maintain human oversight for critical decisions

- Document all model decisions and data sources

- Partner with established financial institutions for distribution

Case Study Success: Upstart secured bank partnerships and regulatory approval by demonstrating their AI lending models reduced default rates while expanding access to underserved populations, all while maintaining rigorous fair lending compliance.

Legal Tech AI: Accuracy and Liability Concerns

Unique Challenges:

- Professional liability and malpractice risks

- Attorney-client privilege and confidentiality requirements

- Unauthorized practice of law (UPL) restrictions

- Extreme accuracy requirements for legal precedent

Success Strategies:

- Position as attorney augmentation tools, not replacements

- Build robust citation and source verification systems

- Provide confidence scores and alternative interpretations

- Partner with law firms for validation and adoption

- Maintain human lawyer oversight for all advice

Case Study Success: Harvey AI achieved success by deeply integrating with existing legal workflows at elite law firms, focusing on research augmentation rather than advice generation, and maintaining rigorous accuracy standards with lawyer oversight.

Education AI: Pedagogical Effectiveness and Ethics

Unique Challenges:

- FERPA student privacy regulations

- Pedagogical effectiveness validation requirements

- Equity concerns and digital divide considerations

- Age-appropriate content and safety requirements

Success Strategies:

- Validate learning outcomes with controlled studies

- Build adaptive systems that personalize to student needs

- Design for teacher empowerment, not replacement

- Address equity and accessibility from day one

- Partner with schools and districts for pilot programs

Case Study Success: Khan Academy’s AI tutor (Khanmigo) succeeded by validating learning outcomes through research partnerships, integrating with existing curricula, and designing for teacher oversight and student privacy.

The AI MVP Success Checklist

Pre-Development Phase

Problem Validation:

- ✅ Conducted 20+ user interviews identifying consistent pain points

- ✅ Validated users currently spend time/money on workarounds

- ✅ Confirmed users can quantify value of solving the problem

- ✅ Established clear willingness-to-pay thresholds

Market Analysis:

- ✅ Analyzed competitive landscape and identified differentiation

- ✅ Confirmed 10x improvement potential on specific use cases

- ✅ Identified defensible moats (data, workflow, expertise)

- ✅ Validated market size supports venture-scale opportunity

Technical Feasibility:

- ✅ Confirmed AI can actually solve the validated problem

- ✅ Identified required accuracy thresholds and benchmarks

- ✅ Assessed data availability and quality requirements

- ✅ Evaluated regulatory and compliance considerations

Development Phase

MVE Design:

- ✅ Defined single use case for initial focus

- ✅ Designed user experience delivering value in 5 minutes

- ✅ Built trust-first features (explainability, confidence, overrides)

- ✅ Created onboarding requiring zero AI knowledge

Technical Build:

- ✅ Achieved 95%+ accuracy on core use case

- ✅ Tested extensively on real user data and edge cases

- ✅ Built monitoring and alerting for performance degradation

- ✅ Implemented security, privacy, and compliance frameworks

Business Model:

- ✅ Defined pricing strategy based on value delivered

- ✅ Tested willingness to pay with beta users

- ✅ Validated unit economics and growth model

- ✅ Identified multiple revenue opportunities

Launch Phase

Go-to-Market:

- ✅ Identified target customers and acquisition channels

- ✅ Created content demonstrating clear value proposition

- ✅ Established success metrics and tracking infrastructure

- ✅ Built feedback loops for continuous improvement

User Onboarding:

- ✅ New users experience value within first session

- ✅ Onboarding requires minimal setup or configuration

- ✅ Clear documentation and support resources available

- ✅ Feedback mechanisms for problems and suggestions

Performance Monitoring:

- ✅ Real-time dashboards tracking key metrics

- ✅ Automated alerts for performance issues

- ✅ User feedback collection and analysis systems

- ✅ Competitor monitoring and market intelligence

Post-Launch Phase

Retention and Growth:

- ✅ Weekly retention cohorts showing 40%+ retention

- ✅ Net Promoter Score above 50

- ✅ Organic growth from word-of-mouth and referrals

- ✅ Expansion revenue from existing customers

Continuous Improvement:

- ✅ Regular user interviews identifying pain points

- ✅ A/B testing on key features and flows

- ✅ Model performance improvements and updates

- ✅ Competitive analysis and strategic adjustments

Common Myths About AI MVPs Debunked

Myth 1: “You Need the Best Model to Win”

Reality: Success depends far more on UX, reliability, and problem selection than model sophistication. Many successful AI products use relatively simple models (or even an off-the-shelf LLM API) but excel at identifying valuable problems and creating delightful experiences.

Evidence: Jasper.ai built a $1.5B valuation primarily using OpenAI’s API with excellent UX and marketing, not proprietary models. Grammarly succeeded with traditional ML for years before adding LLMs.

Myth 2: “First Mover Advantage Is Everything”

Reality: In AI, fast followers often win by learning from pioneers’ mistakes. Better execution, superior UX, and clearer positioning beat early launch dates.

Evidence: Google Bard (later rebranded as Gemini) launched after ChatGPT and still built a large user base, helped by integration with Google’s existing products. Claude succeeded by focusing on safety and consistency rather than being first.

Myth 3: “AI Products Must Be Cheap or Free”

Reality: Users happily pay premium prices for AI products that deliver clear, quantifiable value. Enterprise AI tools command substantial prices when ROI is obvious.

Evidence: GitHub Copilot charges $10-19/month with millions of paying users. Jasper charges $39-125/month. Midjourney charges $10-120/month. All have strong retention because value exceeds price.

Myth 4: “You Need Proprietary Data to Compete”

Reality: While proprietary data helps, many successful AI companies start with public data and build advantages through superior product design, customer relationships, and domain expertise.

Evidence: Most successful AI writing tools use the same underlying models. Differentiation comes from UX, workflow integration, and brand trust—not exclusive data.

Myth 5: “AI Will Replace Human Workers”

Reality: The most successful AI products augment human capabilities rather than replace them. Products positioned as “human replacement” face resistance; those positioned as “human empowerment” get adopted quickly.

Evidence: Copilot helps developers code faster—doesn’t replace them. Jasper helps marketers write more—doesn’t eliminate the role. The pattern holds across successful AI products.

Frequently Asked Questions About AI MVP Development

How long should AI MVP development take?

Most successful AI MVPs take 3-6 months from concept to initial launch. However, the timeline should prioritize validation over speed. Spend 4-6 weeks on problem validation, 6-8 weeks building your Minimum Viable Experience, and 4-6 weeks on early user testing before broader launch. Rushing to market without validation causes most failures.

How much should I expect to spend building an AI MVP?

Costs vary dramatically based on complexity. Simple AI products using existing APIs (like GPT-4) can launch for $25,000-$75,000. Custom ML models requiring data collection and training typically cost $100,000-$300,000. Highly regulated industries (healthcare, finance) often require $300,000-$1M+ for compliance and validation. Focus spending on problem validation and UX before expensive model development.

Do I need a technical co-founder for an AI startup?

While not absolutely required, having deep technical expertise on your founding team dramatically increases success odds. AI products require nuanced understanding of model capabilities, limitations, and reliability considerations. If you don’t have a technical co-founder, hire a fractional CTO or senior AI engineer early, and invest heavily in your own technical education.

What’s the difference between an AI MVP and traditional MVP?

AI MVPs require additional focus on reliability, transparency, and trust that traditional MVPs don’t. Users expect software to work predictably; AI can fail unpredictably. You must design for explaining AI decisions, handling failures gracefully, and building trust gradually. Traditional MVPs can launch with bugs; AI MVPs with accuracy problems get abandoned immediately.

How do I know if my problem actually needs AI?

Ask: “Could this be solved with rules, logic, or traditional software?” If yes, start there—it’s simpler, more reliable, and easier to debug. AI makes sense when you need to handle unpredictable inputs, make probabilistic decisions, understand natural language, or process complex patterns. Don’t use AI just because it’s trendy; use it because it’s the best tool for your specific problem.

What accuracy rate do I need before launching?

It depends entirely on your use case. Content generation can launch at 70-80% accuracy with human editing. Fraud detection needs 95%+ accuracy and a low false-positive rate, because wrongly flagged transactions erode trust. Medical diagnosis requires 98%+ accuracy for safety. The key question: what accuracy makes your product useful enough that users trust it, while you clearly communicate limitations?
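
For use cases like fraud detection, where the event of interest is rare, a single accuracy number can hide poor performance. The sketch below is illustrative, with hypothetical data and function names. It reports accuracy alongside precision, recall, and false-positive rate, so a launch threshold can be checked against the error type that actually matters.

```python
from typing import Optional


def classification_summary(y_true: list[bool], y_pred: list[bool]) -> dict[str, Optional[float]]:
    """Accuracy plus the error-type metrics that accuracy alone can hide."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    tn = sum(1 for t, p in zip(y_true, y_pred) if not t and not p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    return {
        "accuracy": (tp + tn) / len(y_true),
        # None means the metric is undefined (no predicted or actual positives/negatives).
        "precision": tp / (tp + fp) if (tp + fp) else None,
        "recall": tp / (tp + fn) if (tp + fn) else None,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else None,
    }


if __name__ == "__main__":
    # Hypothetical data: 10 fraudulent transactions out of 1,000, and a model that flags nothing.
    truth = [True] * 10 + [False] * 990
    flags = [False] * 1000
    print(classification_summary(truth, flags))
```

In this example the model scores 99% accuracy while catching no fraud at all, which is why a threshold should be stated per metric rather than as one headline figure.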

How do I compete with big tech companies building similar AI products?

Focus on specific niches, superior UX, and domain expertise. Big tech builds horizontal platforms; you can build vertical solutions that deeply understand specific industries. Compete on customer intimacy, not R&D budget. Companies like Jasper, Notion, and Harvey compete successfully by focusing on specific user workflows and delivering exceptional experiences in narrow domains.

Should I build my own models or use APIs like OpenAI?

Start with APIs unless you have clear technical differentiation requirements. APIs let you validate product-market fit quickly and cheaply. Build custom models only when: (1) APIs don’t meet your performance requirements, (2) Your proprietary data provides competitive advantage, (3) Cost at scale justifies the development investment, or (4) You need capabilities not available via API.
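
Condition (3), cost at scale, can be estimated with a simple break-even calculation. The sketch below is a rough model under stated assumptions: a one-time build cost, a flat per-1,000-request cost for each option, and no maintenance or retraining costs. The function name and all figures are hypothetical.

```python
import math
from typing import Optional


def breakeven_thousand_requests(custom_build_cost: float,
                                api_cost_per_1k: float,
                                custom_cost_per_1k: float) -> Optional[int]:
    """Thousands of requests after which a custom model becomes cheaper than the API.

    Returns None when the custom model is not cheaper per request, so it never breaks even.
    """
    saving_per_1k = api_cost_per_1k - custom_cost_per_1k
    if saving_per_1k <= 0:
        return None
    return math.ceil(custom_build_cost / saving_per_1k)


if __name__ == "__main__":
    # Hypothetical: $150,000 to build, API at $2.00 per 1k requests, self-hosted at $0.50 per 1k.
    print(breakeven_thousand_requests(150_000, 2.0, 0.5))
```

Comparing the break-even volume with current monthly traffic gives a quick sense of whether a custom build could pay off within a reasonable horizon.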

How do I price my AI product?

Price based on value delivered, not development cost. Calculate customer ROI (time saved, revenue increased, costs reduced) and capture 10-30% of that value. Test multiple price points early. Consider tiered pricing (freemium, professional, enterprise) to capture different willingness to pay. B2B AI products typically charge $50-$500+ per user monthly; B2C products typically charge $10-$50 monthly.
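
The 10-30% rule of thumb translates directly into a starting price range to test. A minimal sketch, assuming the customer's monthly value has already been estimated; the function name and example figure are hypothetical.

```python
def value_based_price_range(monthly_customer_value: float,
                            low_share: float = 0.10,
                            high_share: float = 0.30) -> tuple[float, float]:
    """Price range that captures a given share of the value delivered each month."""
    if monthly_customer_value < 0:
        raise ValueError("monthly_customer_value must be non-negative")
    if not 0.0 <= low_share <= high_share <= 1.0:
        raise ValueError("shares must satisfy 0 <= low_share <= high_share <= 1")
    return (monthly_customer_value * low_share, monthly_customer_value * high_share)


if __name__ == "__main__":
    # Hypothetical customer who gains $600 of value per month.
    low, high = value_based_price_range(600)
    print(f"test prices between ${low:.0f} and ${high:.0f} per month")
```

The range is a starting point for price experiments, not a substitute for them.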

What metrics should I track for an AI MVP?

Track both standard product metrics and AI-specific metrics. Standard: activation rate, retention cohorts, Net Promoter Score, conversion to paid. AI-specific: model accuracy by use case, confidence calibration, user override rate, failure patterns, time to value. Most important: retention—if users don’t come back, nothing else matters.

Conclusion: Building AI Products That Last

Understanding why AI MVPs fail provides a crucial roadmap for avoiding the same pitfalls that destroyed billions in venture capital and countless founder dreams. The pattern is clear: successful AI products don’t start with technology—they start with deeply validated user problems and build AI as the optimal solution.

The AI companies that survive and thrive in 2025 and beyond will be those that:

Prioritize problems over technology. They identify painful, expensive problems that users desperately want solved. AI becomes a tool to deliver solutions, not the reason the product exists.

Build trust through transparency. They design systems users can understand, verify, and override. They communicate confidence levels honestly and handle failures gracefully. Trust compounds; opacity destroys.

Focus on reliability over novelty. They deliver consistent, predictable results users can depend on for important workflows. They test extensively on real data and edge cases before launching broadly.

Validate monetization early. They establish willingness to pay from the beginning, ensuring their product delivers quantifiable value worth paying for. Free users validate interest; paying customers validate businesses.

Differentiate meaningfully. They build 10x better experiences in specific use cases rather than marginal improvements in crowded categories. They create defensible moats through data, workflows, or expertise that compound over time.

The AI revolution is real, but it rewards substance over hype. The founders who succeed won’t be those who launch first or raise the most. They’ll be those who build products users genuinely need, trust completely, and can’t imagine living without.

Your AI MVP doesn’t need to be revolutionary. It needs to be:

- Solving a real, validated problem

- Delivering consistent, reliable value

- Easy to understand and use

- Demonstrably worth paying for

- Defensibly different from alternatives

Start there. Validate ruthlessly. Build thoughtfully. Scale strategically. The AI gold rush continues, but the lasting companies will be those that pan for real value, not just glittering hype.

Ready to build an AI product that succeeds?

Start with problem validation, not technology exploration. Spend your first month understanding user pain points deeply. Build your Minimum Viable Experience focused on reliability and trust. Validate monetization before scaling. The companies that follow this framework won’t just survive the AI shakeout—they’ll define the next generation of truly valuable AI products.
